- Client: Suffolk Libraries

- Sector: Museums and culture

- Duration: 1 week

- What we did: Product innovation, Design sprints, Digital service design

The Full Story

The IT department of Suffolk Libraries would like to replace the self-service machines currently installed at around 40 different locations throughout the county.

I can’t recommend this kind of research sprint enough. We got a report, detailed technical validation of an idea, mock ups and a plan for how to proceed, while getting staff and stakeholders involved in the project — all in the space of 5 days.

Instead of betting the farm on one particular solution, they commissioned Clearleft to do a week-long combination of feasibility study and design sprint.

James and Jeremy packed their bags and went to Ipswich…

Day one

In the morning we met with some library managers and library staff from different libraries in Suffolk. We asked them about the problems they were experiencing with the current self-service kiosks.

There was general agreement that the current system could sometimes be unreliable. It doesn’t deal with intermittent network connections very well. That means that users don’t trust the system.

Everyone agreed that the language and tone of voice on the current interface could be adjusted.

We also asked them what they would like from a self-service machine. There was a wide variety of answers here. Some staff wanted a new self-service machine that would carry out the current functionality, but more efficiently. Others wanted the machines to be able to offer more services. It quickly became clear that we’d need to prioritise this wish list. The pains and gains varied from library to library.

In the afternoon we had a discussion with the project owners about the challenges involved. Communicating with the Library Management System (LMS) is currently very problematic because the self-service machines use an old protocol called SIP (Standard Interchange Protocol). But the LMS does have an undocumented web-based API. If that were available, many more possibilities would open up.

We also discussed hardware options: 1D barcode scanners, 2D barcode scanners, or even the camera in a tablet.

Digging deeper, it became clear that a lot of the current issues stem from the self-service machines being one monolithic system. If the functionality could be decoupled (hardware, interface, network), then there would be less lock-in, allowing more iteration and experimentation in future.
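As a rough illustration of that kind of decoupling (not code from the sprint), the sketch below keeps the scanner, the LMS connection, and the display as separate, swappable parts. Every name here (`createKiosk`, `readBarcode`, `checkOut`, `show`) is hypothetical and not taken from the Suffolk Libraries system or its LMS; the fakes stand in for real hardware and a real network client.

```javascript
// Sketch only: a kiosk assembled from three independent parts.
// Any part can be replaced without touching the other two.
function createKiosk({ scanner, lms, display }) {
  return {
    async borrow(cardNumber) {
      const barcode = await scanner.readBarcode();
      const result = await lms.checkOut(cardNumber, barcode);
      display.show(
        result.ok ? `Borrowed: ${result.title}` : `Sorry: ${result.error}`
      );
      return result;
    },
  };
}

// Fake scanner: hands back pre-loaded barcodes one at a time.
function fakeScanner(codes) {
  const queue = [...codes];
  return {
    async readBarcode() {
      return queue.shift();
    },
  };
}

// Fake LMS client: looks items up in an in-memory catalogue.
function fakeLms(catalogue) {
  return {
    async checkOut(cardNumber, barcode) {
      const title = catalogue[barcode];
      return title
        ? { ok: true, title }
        : { ok: false, error: "item not recognised" };
    },
  };
}

// Fake display: records messages instead of drawing them.
function recordingDisplay() {
  const messages = [];
  return {
    messages,
    show(message) {
      messages.push(message);
    },
  };
}
```

With this shape, swapping a 1D scanner for a 2D one, or SIP for a web API, would mean writing a new `scanner` or `lms` object rather than replacing the whole machine.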

Then we made a plan for the rest of the week—nothing too specific, but a general plan of action culminating in some kind of playback on day five. But we still weren’t sure whether we’d be creating a prototype in code, or some interface mock-ups. At the very least though, we would provide a feasibility report.

We finished the day with some observations of people in the library using the self-service machines. We were introduced to library staff, all of whom had plenty to say about the self-service machines.

At the end of day one, we had a good sense of the properties any new system would need to embody:

- Reliability

- Clarity

- Efficiency

Day two

The second day began with a detailed look at three services provided by the self-service machines:

- borrowing books,

- returning books, and

- renewing books.

We plotted out the flow of each service. It turned out that returning books was actually fairly straightforward (something borne out by our observation of the machines in use), so it’s important that we don’t inadvertently overcomplicate it. Renewing books, on the other hand, was overly complex; we could certainly suggest changes for improvement there.

Any remaining questions we had about any of the steps in the user journeys were answered by trying out the machines for ourselves. We had a test library card to play with.

In the afternoon we gathered library staff together for a sketching workshop. After an icebreaker exercise to reassure everyone that drawing skills weren’t important, we all got stuck into generating lots and lots of quick sketches of interface ideas. After everyone played back those ideas, we did a bit of dot-voting to sniff out the suggestions that resonated the most. Then we did a couple of higher fidelity sketches of some of those ideas and critiqued the results.

By the end of the day we had lots of ideas on the board. That was what we wanted—this was the day for divergent thinking. Day three would be all about converging on the core proposition.

Day three

A group of us convened first thing in the morning to prioritise the wish list from the day before. We made three divisions on the wall:

- Minimum Viable Product (first release),

- Second phase (6-12 months out), and

- Backlog (1-2 years).

Together, we took the post-it notes of features and functionality that had been generated on day two, and we started to put them in the appropriate column. Much robust discussion ensued—always a good sign. After a while, we had the items sorted. Now we could focus in on that first column: minimum viable product.

Up till this point, we had tried to enlist as much collaboration and participation as possible. Now we decided to break that trend and get some heads-down alone time. We ensconced ourselves in the reading room and opened up our laptops.

James started comping up interface ideas in Sketch. Jeremy started hacking around in JavaScript. As always when code is involved, there was plenty of yak-shaving—getting the right libraries, setting up a test server that could serve over HTTPS because the getUserMedia API requires it …you get the idea.
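To show why the HTTPS set-up mattered, here is a minimal sketch of a camera request. It uses the current promise-based `navigator.mediaDevices.getUserMedia` call, which may differ from what was written during the sprint. The helper names `cameraSupport` and `openCamera`, and the `env` parameter (a stand-in for the browser's `window`), are our own assumptions for illustration.

```javascript
// Sketch only: report whether camera capture is possible in this environment.
// `env` is a hypothetical stand-in for the browser's global object (window).
function cameraSupport(env) {
  // Browsers only expose camera capture in secure contexts (HTTPS or localhost).
  if (!env.isSecureContext) {
    return { ok: false, reason: "insecure-context" };
  }
  const devices = env.navigator && env.navigator.mediaDevices;
  if (!devices || typeof devices.getUserMedia !== "function") {
    return { ok: false, reason: "no-getusermedia" };
  }
  return { ok: true, reason: null };
}

// Ask for the rear-facing camera and attach the stream to a <video> element.
async function openCamera(env, videoElement) {
  const support = cameraSupport(env);
  if (!support.ok) {
    throw new Error("Camera unavailable: " + support.reason);
  }
  const stream = await env.navigator.mediaDevices.getUserMedia({
    video: { facingMode: "environment" },
    audio: false,
  });
  videoElement.srcObject = stream;
  return stream;
}
```

In a page this would be called as `openCamera(window, document.querySelector("video"))`; served over plain HTTP from anything other than localhost, the first check fails before the camera is ever requested.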

By the afternoon, interfaces were starting to emerge and the code was beginning to work. The code was there to test a hypothesis: could a barcode be read from the camera in a tablet? By the end of the day we had an answer to that question. The answer was “Yes!” …in theory. But “no” in practice. The code worked but the accuracy of the barcode-reading just wasn’t anywhere near good enough.

This was disheartening. We had to remind ourselves that getting a definite answer one way or the other—positive or negative—was a fruitful exercise.

Time to rethink the hardware angle.

Day four

Okay. The idea of reading barcodes from a device camera is off the cards. Time to investigate barcode scanning. Assuming that a barcode scanner is connected via USB or Bluetooth, how can that input be passed to a web browser?

In short, it can’t. The security sandbox of the browser (rightly) doesn’t allow access to peripherals. There is a potential solution: making a Chrome App. That’s a way of wrapping up a regular web app into a package that can also access USB peripherals. It works great on Mac, Windows, Linux …but not Android, the platform that would suit the self-service system best.

It’s looking more and more likely that a native Android app is unavoidable. Still, it might be possible to make the app a “shell” that pulls in HTML, CSS, and JavaScript served from the web.

While all this technical research was going on, James refined the interface ideas, mocking up user flows with InVision. A quick playback to the project owners verified that the user interface was on the right track.

With the technical questions mostly answered and the user flows mostly sorted, we began to write up our findings. On the last day of the project we’ll need to play back the results of our research, and present a report with recommendations.

Day five

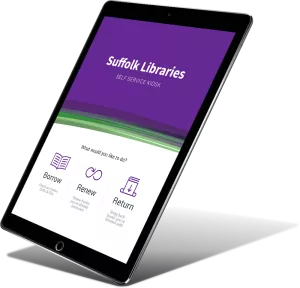

On the final day of this brief but intense project, James finished refining the interface, putting it into a clickable prototype. Just after lunch, we gathered as many people as we could and presented back our findings from the week. We kept it brief and then passed around the prototype on tablets so that people could get a feel for what the user experience would be like.

We spent most of the final day collaboratively writing a report. It contained our discoveries, our technical recommendations, and some early estimates for costs.

And with that, we wrapped up our time in Ipswich and took the train back to Brighton.

You can also read a write-up of this project from the client’s perspective.